Edge Computing Opportunities and Frustrations

Balancing quality and quantity of edge data presents a difficult challenge.

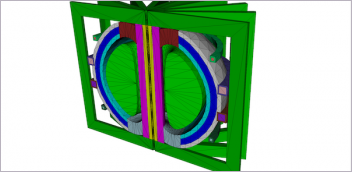

Analog Devices offers sensors to monitor equipment conditions. Image courtesy of Analog Devices.


May 1, 2019

In real life, we prize the judgment of people who can recognize their own innate biases and prejudices and are willing to be transparent about them. Can a sensor be built to behave with the same integrity? Alternatively, can a sensor be built to warn you when it has reason to believe the data it has collected may be flawed, unreliable or questionable?

Tony Zarola, general manager at Analog Devices, thinks it’s imperative that we build sensors that way. After all, in the coming era of autonomous vehicles, we’ll be relying on them to navigate our cars and our loved ones to safety. He calls this integrity-like characteristic in sensors “sensor robustness.”

“We’ve been making sensors for a long time, so we understand how our sensors detect and measure information,” he says. “We design and calibrate [the sensor] so that it can reject data like vibration from a gravel road, for example. That translates to sensor robustness. We understand what can impact this robustness. So we’ve made the sensor to be immune to the mitigating factors. And in times when the sensor is not confident in the data it has collected, it will let you know.”
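
Zarola doesn’t describe how Analog Devices implements this internally. As a hedged illustration only, the general idea of a sensor that smooths out disturbances and also reports when it isn’t confident can be sketched in Python. The `RobustSensor` class, the moving-average filter and the standard-deviation threshold are all hypothetical stand-ins, not Analog Devices’ design.

```python
from collections import deque
from statistics import mean, pstdev


class RobustSensor:
    """Hypothetical wrapper that smooths raw readings and reports confidence.

    Smoothing is a simple moving average over the last `window` samples
    (a crude low-pass filter). A reading is flagged as low-confidence when
    the spread of the window exceeds `max_std`, e.g. during heavy vibration.
    """

    def __init__(self, window: int = 8, max_std: float = 0.5) -> None:
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.max_std = max_std

    def update(self, raw: float) -> tuple[float, bool]:
        """Add a raw sample; return (smoothed value, confident?)."""
        self.samples.append(raw)
        smoothed = mean(self.samples)
        confident = pstdev(self.samples) <= self.max_std
        return smoothed, confident
```

A steady signal yields confident readings, while a window full of large swings (a stand-in for a gravel road) still returns a smoothed value but marks it as not confident, so downstream logic can decide how much to trust it.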

In dealing with edge device sensor data, engineers and data scientists grapple with not only the volume but also the quality of the data. Here, according to many industry insiders, all roads lead not to Rome but to machine learning (ML) and artificial intelligence (AI).

Do You Trust Your IMUs?

At present, carmakers have successfully put vehicles on the road that fall into what the Society of Automotive Engineers (SAE) describes as Level 2: Partial Automation. Many carmakers are also contemplating skipping Level 3: Conditional Automation. Instead, they may aim straight for Level 4: High Automation or Level 5: Full Automation. (For more, read the article “Leveling Up in Autonomy,” page 12.)

“Level 3 presents an interesting challenge because, in some circumstances, the vehicle needs to hand the control back over to the driver. This is an area of uncertainty,” explains Zarola. “The questions are: How much time does the system have before the human takes over? What does the system do while it waits for the human to take over? Remember, this period could be 10 to 15 seconds.”

As Zarola sees it, while the autopilot or the advanced driver assistance system (ADAS) is waiting for the human driver to take over, the burden of keeping the car navigating safely falls on the inertial measurement units (IMUs). “The IMUs have to be of a certain performance level so it can handle that period,” he says.
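
Zarola’s point about IMU performance can be made concrete with a simplified, textbook error model: a constant accelerometer bias b, integrated twice, produces a position error of ½·b·t². The bias values below are hypothetical, chosen only to show how the error scales over the 10-to-15-second window he describes; real IMU error budgets also include gyroscope bias, noise and other terms that this sketch ignores.

```python
def dead_reckoning_drift(bias_mps2: float, seconds: float) -> float:
    """Position error (m) from a constant accelerometer bias, integrated twice.

    Simplified model: velocity error = b * t, position error = 0.5 * b * t**2.
    """
    return 0.5 * bias_mps2 * seconds ** 2


# Hypothetical bias levels (m/s^2) over the 10-15 s handover window.
for bias in (0.01, 0.001):
    for t in (10.0, 15.0):
        drift = dead_reckoning_drift(bias, t)
        print(f"bias={bias} m/s^2, t={t:.0f} s -> drift={drift:.3f} m")
```

Because the error grows with the square of time, a 10x better bias specification buys a 10x smaller drift over the same window, which is one way to read “a certain performance level.”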

Anticipating Levels 4 and 5, Zarola and his colleagues plan to bolster their radar systems’ resolution to enable object detection. “We’re also looking to reduce reaction time, in terms of objects coming at the vehicle from an orthogonal angle,” he says. “Cameras may take many frames to determine what it sees before it can react.”
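
To see why the number of frames matters, the distance a vehicle covers while a camera pipeline accumulates frames is simply speed × (frames ÷ frame rate). The speed, frame count and frame rate used here are hypothetical examples, not figures from Analog Devices, and the sketch ignores processing and actuation time.

```python
def distance_before_reaction(speed_kmh: float, frames: int, fps: float) -> float:
    """Metres travelled while a camera pipeline waits for `frames` frames.

    Counts only frame-accumulation latency; processing, decision and
    actuation delays would add to this.
    """
    speed_mps = speed_kmh / 3.6   # km/h -> m/s
    latency_s = frames / fps      # time needed to capture the frames
    return speed_mps * latency_s
```

For example, at a hypothetical 100 km/h, waiting for 5 frames at 30 frames per second means the vehicle travels roughly 4.6 m before the system can begin to react.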

While testing its large-animal detection system, Volvo discovered that the software didn’t know how to deal with kangaroos (“Volvo admits its self-driving cars are confused by kangaroos,” The Guardian, June 2017).

A fully autonomous Level 5 vehicle, in Zarola’s view, is still in the far future. “We still have some way to go in terms of data collection to improve sensor fusion and using AI to recognize objects,” he says.

Retrofitted IoT

For many manufacturers, replacing legacy machines with a new batch of costly connected devices is not practical. For them, the solution is retrofitting: placing sensors on old equipment to begin gathering intelligence. This offers a low-barrier entry point into the Internet of Things (IoT) era.

“In the future, more and more manufacturing equipment providers will offer smart machines with built-in sensors and services, but it’ll take time before these smart machines go into operation or reach the field. In the meantime, you’ll have a mix of machines that need retrofitting, and machines that are already outfitted with sensors,” says Julien Calviac, head of strategy for EXALEAD at Dassault Systèmes.

“In condition monitoring of motors, the package itself influences the translation of the data,” Zarola points out. “Even the materials, the way you connect the sensor to the motor, and the adjacent machineries could corrupt the data.”

But even in the era of IoT, the I (internet connectivity) is not always a given. “On a train going through a tunnel or a piece of equipment in a mine, you can’t always be connected, so if you’re logging data from a device like that, if the monitoring algorithm needs to connect to the network to operate, you might have to consider that in your choice of the edge,” warns Seth DeLand, product marketing manager, data analytics, MathWorks.
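
One common way to tolerate the kind of outages DeLand describes is a store-and-forward buffer on the edge device: readings are queued locally and uploaded once the network returns. The sketch below is a hypothetical illustration; the `send` callback stands in for whatever upload mechanism a real system would use, and the bounded queue deliberately drops the oldest readings if an outage outlasts the buffer.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Hypothetical edge buffer that queues readings while offline.

    `send` returns True if a reading was uploaded, False if the network is
    unavailable. Once `capacity` is reached, the oldest readings are dropped.
    """

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000) -> None:
        self.send = send
        self.queue = deque(maxlen=capacity)

    def log(self, reading: dict) -> None:
        """Record a reading and opportunistically try to upload the backlog."""
        self.queue.append(reading)
        self.flush()

    def flush(self) -> int:
        """Upload queued readings oldest-first; return how many were sent."""
        sent = 0
        while self.queue:
            if not self.send(self.queue[0]):
                break  # still offline: keep the remaining readings for later
            self.queue.popleft()
            sent += 1
        return sent
```

Whether to drop the oldest data, the newest data, or downsample when the buffer fills is a design choice that depends on what the monitoring algorithm needs.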

Machine Learning’s Dilemma

Edge devices give you a wealth of data (hourly temperatures and vibrations from a piece of factory equipment, or the daily wind loads on a wind turbine, for example), but not necessarily an easy path toward failure analysis or product design improvement.

The first hurdle is most likely data quality. “The data from the devices isn’t going to be as clean as the data engineers are used to getting from a physical test or a lab,” says DeLand. “It might include, for example, a chunk of data resulting from someone bumping on a sensor. There might be missing time slices in the data because the equipment broke down. It often requires a significant amount of cleanup and preprocessing before you can use it.”
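
The two problems DeLand mentions, bumps and missing time slices, map onto two common preprocessing steps: finding gaps in the timestamps and flagging spikes. The sketch below is one hedged way to do both in Python; the gap tolerance and the median/MAD outlier rule with its `k` threshold are assumptions chosen for illustration, not the method any particular vendor’s tools use.

```python
from statistics import median
from typing import Optional


def find_gaps(timestamps: list[float], expected_dt: float,
              tol: float = 1.5) -> list[tuple[float, float]]:
    """Return (start, end) pairs where consecutive samples are further apart
    than `tol` times the expected sampling interval (missing time slices)."""
    return [(a, b) for a, b in zip(timestamps, timestamps[1:])
            if b - a > tol * expected_dt]


def drop_spikes(values: list[float], k: float = 5.0) -> list[Optional[float]]:
    """Replace spikes (e.g., someone bumping the sensor) with None.

    A sample is a spike if it lies more than `k` scaled MADs from the median.
    If the MAD is zero (e.g., a flat signal), nothing is flagged.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return list(values)
    scale = 1.4826 * mad  # scales MAD to match a standard deviation for Gaussian data
    return [v if abs(v - med) <= k * scale else None for v in values]
```

Marking bad samples as `None` rather than deleting them keeps the time axis intact, so a later step can decide whether to interpolate or discard them.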

Many firms catering to connected device makers promote AI-driven software and methods as the ideal way to sift through the vast amount of edge data and find meaningful patterns and insights in it. But that approach, too, is a double-edged sword.

“The data you collect is usually not rich enough for AI to learn, not to mention deliver on users’ expectations. For AI to learn enough and provide not just predictions but also prescriptions, you need more context information,” says Calviac. “You need to have not only field data from the edge devices but also data from the engineering department (thresholds, rules, requirements), process information from manufacturing execution systems and simulation data.”
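
Calviac’s point is that raw field readings become more useful once they are joined with engineering context such as thresholds and rules. A minimal, hypothetical sketch of that enrichment step is shown below; the asset registry, signal names and limit values are invented for illustration and say nothing about how EXALEAD works.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    asset_id: str
    signal: str
    value: float


# Hypothetical engineering limits, keyed by (asset type, signal).
LIMITS = {
    ("pump", "vibration_mm_s"): 7.0,
    ("pump", "temperature_c"): 80.0,
}

# Hypothetical asset registry mapping asset IDs to asset types.
ASSET_TYPES = {"P-101": "pump", "P-102": "pump"}


def enrich(reading: Reading) -> dict:
    """Attach the engineering limit for this signal and whether it is exceeded.

    Readings with no known asset type or limit get limit=None.
    """
    limit = LIMITS.get((ASSET_TYPES.get(reading.asset_id), reading.signal))
    return {
        "asset_id": reading.asset_id,
        "signal": reading.signal,
        "value": reading.value,
        "limit": limit,
        "exceeds_limit": limit is not None and reading.value > limit,
    }
```

The enriched records carry the engineering department’s rules alongside the field data, which is the kind of context an AI model would otherwise have to guess at.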

For data analysis, Dassault Systèmes offers EXALEAD, originally a semantic-based enterprise search engine. The technology has since evolved into an AI-powered information intelligence engine capable of crawling, discovering and classifying all types of data, from text-based requirements and field reports to 3D engineering models, product data, bill of materials (BOM) graphs and IoT data. The aim is to give users decision support that helps them improve operational performance and accelerate innovation.

“With data streaming in from multiple sensors, you can’t visualize it to make sense of it. So you might need ML to help you understand the patterns,” says DeLand. “But ML is garbage in, garbage out. If the data you use to train and develop the model is flawed, then your resulting model is flawed.”

In the best-case scenario, ML produces code or a model you can use with confidence. Even here, there’s a catch: “You want to avoid the black box dilemma,” cautions Calviac. “The AI will deliver you a model, but recommendations provided by that model may not be easy to understand or be trusted by experts and end-users.”

Sensing Oversteering

One successful example of using sensor data for safety and design improvement was documented by BMW Group in October 2018. To understand the complex factors that contribute to oversteering, and to develop a way to detect it, BMW Group equipped a BMW M4 with sensors and drove it on a track, logging when the vehicle was in an oversteer situation. The test produced more than 200,000 data points in less than 45 minutes (“Detecting Oversteering in BMW Automobiles with Machine Learning”).

Working in MATLAB, the BMW team developed a supervised machine learning model as a proof of concept. According to the article, even with little previous ML experience, the team completed a working electronic control unit (ECU) prototype capable of detecting oversteering in just three weeks.

The BMW team loaded the collected data into the Classification Learner app in Statistics and Machine Learning Toolbox and trained machine learning models using a variety of classifiers. Initial results were between 75% and 80% accurate. After the team cleaned and reduced the raw data and repeated the process, classifier accuracy improved significantly, to over 95%, according to the article.
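
The BMW work used MATLAB’s Classification Learner, and its code is not described here. To illustrate the general supervised-learning loop (train on labeled data, predict, measure accuracy), a deliberately simple nearest-centroid classifier is sketched below in Python. It is a swapped-in technique for illustration only; the labels and two-element feature vectors are hypothetical, and any accuracy it reports says nothing about BMW’s results.

```python
from math import dist
from statistics import fmean


def train_centroids(X: list[list[float]], y: list[str]) -> dict[str, list[float]]:
    """Compute the mean feature vector (centroid) for each class label."""
    centroids = {}
    for label in set(y):
        rows = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = [fmean(col) for col in zip(*rows)]
    return centroids


def predict(centroids: dict[str, list[float]], x: list[float]) -> str:
    """Assign x to the class whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], x))


def accuracy(centroids: dict[str, list[float]],
             X: list[list[float]], y: list[str]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(predict(centroids, x) == label for x, label in zip(X, y))
    return correct / len(y)
```

The before-and-after comparison in the article corresponds to running the same train-and-evaluate loop twice, once on raw data and once on cleaned, reduced data, and comparing accuracy on held-out samples.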

About the Author

Kenneth Wong is Digital Engineering’s resident blogger and senior editor. Email him at kennethwong@digitaleng.news or share your thoughts on this article at digitaleng.news/facebook.